Controlling Stochastic Differential Equations

In this tutorial, we show how to use SciMLSensitivity to control the time evolution of a system described by a stochastic differential equation (SDE). Specifically, we consider a continuously monitored qubit described by an SDE in the Ito sense with multiplicative scalar noise (see [1] for a reference):

\[dψ = b(ψ(t), Ω(t))ψ(t) dt + σ(ψ(t))ψ(t) dW_t .\]

We use a predictive model to map the quantum state of the qubit, ψ(t), at each time to the control parameter Ω(t) which rotates the quantum state about the x-axis of the Bloch sphere to ultimately prepare and stabilize the qubit in the excited state.
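To make the phrase "multiplicative noise" concrete, here is a minimal Euler-Maruyama sketch for a scalar Ito SDE of the same form, dX = a X dt + s X dW. It is not the qubit model: the function name `euler_maruyama` and the constants `a` and `s` are illustrative assumptions, and only the Julia standard library is used.

```julia
import Random

# Euler-Maruyama for the scalar Ito SDE dX = a*X dt + s*X dW (toy model, not the qubit)
function euler_maruyama(x0, a, s, dt, nsteps; rng = Random.default_rng())
    x = x0
    for _ in 1:nsteps
        dW = sqrt(dt) * randn(rng)      # Brownian increment with variance dt
        x += a * x * dt + s * x * dW    # the noise amplitude scales with the state
    end
    return x
end

# with s = 0 the scheme reduces to the explicit Euler method for dx/dt = a*x
x_det = euler_maruyama(1.0, -0.5, 0.0, 1e-3, 1000)
```

The tutorial itself uses the same fixed-step scheme through `SDE.EM()`, with the neural network entering the drift term.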

Copy-Pasteable Code

Before getting to the explanation, here's some code to start with. A full explanation of the definition and training process follows:

# load packages

import SciMLSensitivity as SMS, Optimization as OPT, OptimizationOptimisers as OPO

import StochasticDiffEq as SDE, DiffEqCallbacks as DEC, DiffEqNoiseProcess as DNP

import Zygote, Statistics, LinearAlgebra as LA

import Lux, Random, ComponentArrays as CA

import Plots

rng = Random.default_rng()

#################################################

lr = 0.01f0

epochs = 100

numtraj = 16 # number of trajectories in parallel simulations for training

numtrajplot = 32 # .. for plotting

# time range for the solver

dt = 0.0005f0

tinterval = 0.05f0

tstart = 0.0f0

Nintervals = 20 # total number of intervals, total time = tinterval * Nintervals

tspan = (tstart, tinterval * Nintervals)

ts = Array(tstart:dt:(Nintervals * tinterval + dt)) # time array for noise grid

# Hamiltonian parameters

Δ = 20.0f0

Ωmax = 10.0f0 # control parameter (maximum amplitude)

κ = 0.3f0

# loss hyperparameters

C1 = Float32(1.0) # evolution state fidelity

struct Parameters{flType, intType, tType}

lr::flType

epochs::intType

numtraj::intType

numtrajplot::intType

dt::flType

tinterval::flType

tspan::tType

Nintervals::intType

ts::Vector{flType}

Δ::flType

Ωmax::flType

κ::flType

C1::flType

end

myparameters = Parameters{typeof(dt), typeof(numtraj), typeof(tspan)}(lr, epochs, numtraj,

numtrajplot, dt,

tinterval, tspan,

Nintervals, ts,

Δ, Ωmax, κ, C1)

################################################

# Define Neural Network

# state-aware

nn = Lux.Chain(Lux.Dense(4, 32, Lux.relu),

Lux.Dense(32, 1, tanh))

p_nn, st = Lux.setup(rng, nn)

p_nn = CA.ComponentArray(p_nn)

###############################################

# initial state anywhere on the Bloch sphere

function prepare_initial(dt, n_par)

# shape 4 x n_par

# input number of parallel realizations and dt for type inference

# random position on the Bloch sphere

theta = acos.(2 * rand(typeof(dt), n_par) .- 1) # uniform sampling for cos(theta) between -1 and 1

phi = rand(typeof(dt), n_par) * 2 * pi # uniform sampling for phi between 0 and 2pi

# real and imaginary parts ceR, cdR, ceI, cdI

u0 = [

cos.(theta / 2),

sin.(theta / 2) .* cos.(phi),

false * theta,

sin.(theta / 2) .* sin.(phi)

]

return vcat(transpose.(u0)...) # build matrix

end

# target state

# ψtar = |e>

u0 = prepare_initial(myparameters.dt, myparameters.numtraj)

###############################################

# Define SDE

function qubit_drift!(du, u, p, t)

# expansion coefficients |Ψ> = ce |e> + cd |d>

ceR, cdR, ceI, cdI = u # real and imaginary parts

# Δ: atomic frequency

# Ω: Rabi frequency for field in x direction

# κ: spontaneous emission

Δ, Ωmax, κ = p.myparameters

nn_weights = p.p_nn

Ω = (nn(u, nn_weights, st)[1] .* Ωmax)[1]

@inbounds begin

du[1] = 1 // 2 * (ceI * Δ - ceR * κ + cdI * Ω)

du[2] = -cdI * Δ / 2 + 1 * ceR * (cdI * ceI + cdR * ceR) * κ + ceI * Ω / 2

du[3] = 1 // 2 * (-ceR * Δ - ceI * κ - cdR * Ω)

du[4] = cdR * Δ / 2 + 1 * ceI * (cdI * ceI + cdR * ceR) * κ - ceR * Ω / 2

end

return nothing

end

function qubit_diffusion!(du, u, p, t)

ceR, cdR, ceI, cdI = u # real and imaginary parts

κ = p.myparameters[end]

du .= false

@inbounds begin

#du[1] = zero(ceR)

du[2] += sqrt(κ) * ceR

#du[3] = zero(ceR)

du[4] += sqrt(κ) * ceI

end

return nothing

end

# normalization callback

condition(u, t, integrator) = true

function affect!(integrator)

integrator.u .= integrator.u / LA.norm(integrator.u)

end

callback = DEC.DiscreteCallback(condition, affect!, save_positions = (false, false))

CreateGrid(t, W1) = DNP.NoiseGrid(t, W1)

Zygote.@nograd CreateGrid #avoid taking grads of this function

# set scalar random process

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

# get control pulses

p_all = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ])

# define SDE problem

prob = SDE.SDEProblem{true}(

qubit_drift!, qubit_diffusion!, vec(u0[:, 1]), myparameters.tspan,

p_all;

callback, noise = NG)

#########################################

# compute loss

function g(u, p, t)

ceR = @view u[1, :, :]

cdR = @view u[2, :, :]

ceI = @view u[3, :, :]

cdI = @view u[4, :, :]

p[1] *

Statistics.mean((cdR .^ 2 + cdI .^ 2) ./ (ceR .^ 2 + cdR .^ 2 + ceI .^ 2 + cdI .^ 2))

end

function loss(p_nn; alg = SDE.EM(), sensealg = SMS.BacksolveAdjoint(autojacvec = SMS.ReverseDiffVJP()))

pars = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ])

u0 = prepare_initial(myparameters.dt, myparameters.numtraj)

function prob_func(prob, i, repeat)

# prepare initial state and applied control pulse

u0tmp = deepcopy(vec(u0[:, i]))

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

SDE.remake(prob; u0 = u0tmp, callback, noise = NG)

end

_prob = SDE.remake(prob, p = pars)

ensembleprob = SDE.EnsembleProblem(_prob; prob_func, safetycopy = true)

_sol = SDE.solve(ensembleprob, alg, SDE.EnsembleSerial();

sensealg,

saveat = myparameters.tinterval,

myparameters.dt,

adaptive = false,

trajectories = myparameters.numtraj, batch_size = myparameters.numtraj)

A = convert(Array, _sol)

l = g(A, [myparameters.C1], nothing)

# returns loss value

return l

end

#########################################

# visualization -- run for new batch

function visualize(p_nn; alg = SDE.EM())

u0 = prepare_initial(myparameters.dt, myparameters.numtrajplot)

pars = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ])

function prob_func(prob, i, repeat)

# prepare initial state and applied control pulse

u0tmp = deepcopy(vec(u0[:, i]))

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

SDE.remake(prob; p = pars, u0 = u0tmp, callback, noise = NG)

end

ensembleprob = SDE.EnsembleProblem(prob; prob_func, safetycopy = true)

u = SDE.solve(ensembleprob, alg, SDE.EnsembleThreads();

saveat = myparameters.tinterval,

myparameters.dt,

adaptive = false, #abstol=1e-6, reltol=1e-6,

trajectories = myparameters.numtrajplot,

batch_size = myparameters.numtrajplot)

ceR = @view u[1, :, :]

cdR = @view u[2, :, :]

ceI = @view u[3, :, :]

cdI = @view u[4, :, :]

infidelity = @. (cdR^2 + cdI^2) / (ceR^2 + cdR^2 + ceI^2 + cdI^2)

meaninfidelity = Statistics.mean(infidelity)

loss = myparameters.C1 * meaninfidelity

@info "Loss: " loss

fidelity = @. (ceR^2 + ceI^2) / (ceR^2 + cdR^2 + ceI^2 + cdI^2)

mf = Statistics.mean(fidelity, dims = 2)[:]

sf = Statistics.std(fidelity, dims = 2)[:]

pl1 = Plots.plot(0:(myparameters.Nintervals), mf,

ribbon = sf,

ylim = (0, 1), xlim = (0, myparameters.Nintervals),

c = 1, lw = 1.5, xlabel = "steps i", ylabel = "Fidelity", legend = false)

pl = Plots.plot(pl1, legend = false, size = (400, 360))

return pl, loss

end

# burn-in loss

l = loss(p_nn)

# callback to visualize training

visualization_callback = function (state, l; doplot = false)

println(l)

if doplot

pl, _ = visualize(state.u)

display(pl)

end

return false

end

# Display the SDE solution with the initial parameter values.

visualization_callback((; u = p_nn), l; doplot = true)

###################################

# training loop

@info "Start Training.."

# optimize the parameters for a few epochs with Adam on time span

# Setup and run the optimization

adtype = OPT.AutoForwardDiff()

optf = OPT.OptimizationFunction((x, p) -> loss(x), adtype)

optprob = OPT.OptimizationProblem(optf, p_nn)

res = OPT.solve(optprob, OPO.Adam(myparameters.lr),

callback = visualization_callback,

maxiters = 100)

# plot optimized control

visualization_callback(res, loss(res.u); doplot = true)

false

Step-by-step description

Load packages

import SciMLSensitivity as SMS

import Optimization as OPT, OptimizationOptimisers as OPO, Zygote

import StochasticDiffEq as SDE, DiffEqCallbacks as DEC, DiffEqNoiseProcess as DNP

import Statistics, LinearAlgebra as LA

import Lux, Random, ComponentArrays as CA

import Plots

Parameters

We define the parameters of the qubit and hyperparameters of the training process.

lr = 0.01f0

epochs = 100

numtraj = 16 # number of trajectories in parallel simulations for training

numtrajplot = 32 # .. for plotting

rng = Random.default_rng()

# time range for the solver

dt = 0.0005f0

tinterval = 0.05f0

tstart = 0.0f0

Nintervals = 20 # total number of intervals, total time = tinterval * Nintervals

tspan = (tstart, tinterval * Nintervals)

ts = Array(tstart:dt:(Nintervals * tinterval + dt)) # time array for noise grid

# Hamiltonian parameters

Δ = 20.0f0

Ωmax = 10.0f0 # control parameter (maximum amplitude)

κ = 0.3f0

# loss hyperparameters

C1 = Float32(1.0) # evolution state fidelity

struct Parameters{flType, intType, tType}

lr::flType

epochs::intType

numtraj::intType

numtrajplot::intType

dt::flType

tinterval::flType

tspan::tType

Nintervals::intType

ts::Vector{flType}

Δ::flType

Ωmax::flType

κ::flType

C1::flType

end

myparameters = Parameters{typeof(dt), typeof(numtraj), typeof(tspan)}(lr, epochs, numtraj,

numtrajplot, dt,

tinterval, tspan,

Nintervals, ts,

Δ, Ωmax, κ, C1)

Main.Parameters{Float32, Int64, Tuple{Float32, Float32}}(0.01f0, 100, 16, 32, 0.0005f0, 0.05f0, (0.0f0, 1.0f0), 20, Float32[0.0, 0.0005, 0.001, 0.0015, 0.002, 0.0025, 0.003, 0.0035, 0.004, 0.0045 … 0.996, 0.9965, 0.997, 0.9975, 0.998, 0.9985, 0.999, 0.9995, 1.0, 1.0005], 20.0f0, 10.0f0, 0.3f0, 1.0f0)

In plain terms, the quantities that were defined are:

- lr = learning rate of the optimizer
- epochs = number of epochs in the training process
- numtraj = number of simulated trajectories in the training process
- numtrajplot = number of simulated trajectories to visualize the performance
- dt = time step for the solver (initial dt if adaptive)
- tinterval = time spacing between checkpoints
- tspan = time span
- Nintervals = number of checkpoints
- ts = discretization of the entire time interval, used for NoiseGrid
- Δ = detuning between the qubit and the laser
- Ωmax = maximum frequency of the control laser
- κ = decay rate
- C1 = loss function hyperparameter
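As a quick sanity check on how the time-related quantities fit together, the following sketch derives the step counts from the values above. The names `steps_per_interval`, `total_steps`, and `ncheckpoints` are illustrative and do not appear in the tutorial code; Float64 is used here only to keep the arithmetic simple.

```julia
dt = 0.0005        # solver time step
tinterval = 0.05   # spacing between saved checkpoints
Nintervals = 20    # number of intervals

steps_per_interval = round(Int, tinterval / dt)  # solver steps between two checkpoints
total_steps = steps_per_interval * Nintervals    # solver steps over the whole time span
ncheckpoints = Nintervals + 1                    # saved states, counting the initial one at t = 0
```

With these values the solver takes 100 steps between checkpoints and 2000 steps in total, and the plots later in the tutorial show 21 points (steps 0 through 20).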

Controller

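We use a neural network to control the parameter Ω(t). One could also use, e.g., polynomials or interpolations, but a neural network shows that the approach works with a flexible controller that has many parameters. The network takes the four real components of the state as input, and its tanh output is scaled by Ωmax, so the control amplitude stays within [-Ωmax, Ωmax].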
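The shape of the controller can be illustrated without Lux. The sketch below is a hand-written stand-in with the same 4 → 32 → 1 layout and a tanh output scaled by Ωmax; the function `controller` and the randomly drawn weights are assumptions made for illustration, not part of the tutorial.

```julia
import Random

relu(x) = max(x, zero(x))

# hypothetical stand-in for the Lux model: Dense(4, 32, relu) followed by Dense(32, 1, tanh)
function controller(u, W1, b1, W2, b2, Ωmax)
    h = relu.(W1 * u .+ b1)                 # hidden layer
    return Ωmax * tanh((W2 * h .+ b2)[1])   # bounded control amplitude
end

rng = Random.MersenneTwister(1)
W1, b1 = randn(rng, Float32, 32, 4), zeros(Float32, 32)
W2, b2 = randn(rng, Float32, 1, 32), zeros(Float32, 1)

# state [ceR, cdR, ceI, cdI] of the excited state
Ω = controller(Float32[1, 0, 0, 0], W1, b1, W2, b2, 10.0f0)
```

Because tanh is bounded by one in magnitude, the returned Ω can never exceed Ωmax in absolute value, whatever the weights are.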

# state-aware

nn = Lux.Chain(Lux.Dense(4, 32, Lux.relu),

Lux.Dense(32, 1, tanh))

p_nn, st = Lux.setup(rng, nn)

p_nn = CA.ComponentArray(p_nn)

ComponentVector{Float32}(layer_1 = (weight = Float32[0.7738776 -0.1361403 -1.2393823 -0.2895503; 0.8233258 -1.3136221 0.56401503 -0.12431003; … ; 1.4705142 -1.4215223 -0.97312355 0.72412294; 0.095320925 -0.6921269 -1.569347 -1.0387102], bias = Float32[-0.21204835, 0.26290536, -0.2373411, -0.44051754, -0.22286832, 0.21562874, 0.063672125, 0.3075251, 0.2784888, 0.07772821 … 0.33488858, -0.43635827, 0.42147166, -0.26656097, -0.42832607, -0.028562248, -0.050834656, -0.42225856, 0.0037261248, 0.109496415]), layer_2 = (weight = Float32[-0.19246042 -0.46341136 … -0.16043316 -0.09594411], bias = Float32[-0.11019669]))

Initial state

We prepare n_par initial states, uniformly distributed over the Bloch sphere. To avoid complex numbers in our simulations, we split the state of the qubit

\[ ψ(t) = c_e(t) (1,0) + c_d(t) (0,1)\]

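into its real and imaginary parts. The simulated state is therefore the real vector (ceR, cdR, ceI, cdI), where ceR and ceI are the real and imaginary parts of c_e, and cdR and cdI those of c_d.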
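The mapping between the complex amplitudes and the real state vector can be sketched as follows. The helper names `to_real` and `to_complex` are hypothetical; the ordering [ceR, cdR, ceI, cdI] and the Bloch-sphere parameterization follow the tutorial code.

```julia
import LinearAlgebra as LA

# complex amplitudes -> real state vector, ordered as [ceR, cdR, ceI, cdI]
to_real(ce::Complex, cd::Complex) = [real(ce), real(cd), imag(ce), imag(cd)]

# real state vector -> complex amplitudes (c_e, c_d)
to_complex(u) = (complex(u[1], u[3]), complex(u[2], u[4]))

# a point on the Bloch sphere with polar angle θ and azimuthal angle ϕ
θ, ϕ = 1.2, 0.7
ce = complex(cos(θ / 2))    # c_e is chosen real, so ceI = 0
cd = sin(θ / 2) * cis(ϕ)    # c_d carries the relative phase
u = to_real(ce, cd)
```

Since cos²(θ/2) + sin²(θ/2) = 1, every state built this way has unit norm, which is why the third row of the initial-state matrix is identically zero and each column is normalized.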

# initial state anywhere on the Bloch sphere

function prepare_initial(dt, n_par)

# shape 4 x n_par

# input number of parallel realizations and dt for type inference

# random position on the Bloch sphere

theta = acos.(2 * rand(typeof(dt), n_par) .- 1) # uniform sampling for cos(theta) between -1 and 1

phi = rand(typeof(dt), n_par) * 2 * pi # uniform sampling for phi between 0 and 2pi

# real and imaginary parts ceR, cdR, ceI, cdI

u0 = [

cos.(theta / 2),

sin.(theta / 2) .* cos.(phi),

false * theta,

sin.(theta / 2) .* sin.(phi)

]

return vcat(transpose.(u0)...) # build matrix

end

# target state

# ψtar = |e>

u0 = prepare_initial(myparameters.dt, myparameters.numtraj)

4×16 Matrix{Float32}:

0.682755 0.818293 0.969445 … 0.614237 0.352431 0.596765

-0.139929 0.251658 0.148225 0.316045 -0.933486 -0.802227

0.0 0.0 0.0 0.0 0.0 0.0

0.717123 -0.516784 -0.195463 -0.723069 -0.0663023 0.0174128

Defining the SDE

We define the drift and diffusion terms of the qubit. The SDE doesn't preserve the norm of the quantum state. To ensure the normalization of the state, we add a DiscreteCallback that renormalizes it after each time step. Further, we use a NoiseGrid from the DiffEqNoiseProcess package as one possibility to simulate a 1D Brownian motion. Note that the NN is placed directly into the drift function, so the control parameter Ω is updated at every evaluation of the drift.
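The two ingredients mentioned here, a Brownian path sampled on a fixed grid and the renormalization step, can be sketched with the standard library alone. The variable names below are illustrative, and the path is only the raw array that one would hand to a noise grid, not a NoiseGrid object itself.

```julia
import LinearAlgebra as LA, Random

rng = Random.MersenneTwister(0)
dt = 0.0005
ts = collect(0.0:dt:1.0)

# independent Gaussian increments with variance dt
dW = sqrt(dt) .* randn(rng, length(ts))

# Brownian path on the grid: starts at zero, each value is the sum of the previous increments
W = cumsum([0.0; dW[1:(end - 1)]])

# renormalization of a state vector, as done in the callback's affect!
u = [0.6, 0.3, 0.0, 0.9]
u ./= LA.norm(u)
```

Shifting the increments by one entry before the cumulative sum guarantees that the path starts at W = 0 at the first grid point.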

# Define SDE

function qubit_drift!(du, u, p, t)

# expansion coefficients |Ψ> = ce |e> + cd |d>

ceR, cdR, ceI, cdI = u # real and imaginary parts

# Δ: atomic frequency

# Ω: Rabi frequency for field in x direction

# κ: spontaneous emission

Δ, Ωmax, κ = p.myparameters

nn_weights = p.p_nn

Ω = (nn(u, nn_weights, st)[1] .* Ωmax)[1]

@inbounds begin

du[1] = 1 // 2 * (ceI * Δ - ceR * κ + cdI * Ω)

du[2] = -cdI * Δ / 2 + 1 * ceR * (cdI * ceI + cdR * ceR) * κ + ceI * Ω / 2

du[3] = 1 // 2 * (-ceR * Δ - ceI * κ - cdR * Ω)

du[4] = cdR * Δ / 2 + 1 * ceI * (cdI * ceI + cdR * ceR) * κ - ceR * Ω / 2

end

return nothing

end

function qubit_diffusion!(du, u, p, t)

ceR, cdR, ceI, cdI = u # real and imaginary parts

κ = p.myparameters[end]

du .= false

@inbounds begin

#du[1] = zero(ceR)

du[2] += sqrt(κ) * ceR

#du[3] = zero(ceR)

du[4] += sqrt(κ) * ceI

end

return nothing

end

# normalization callback

condition(u, t, integrator) = true

function affect!(integrator)

integrator.u .= integrator.u / LA.norm(integrator.u)

end

callback = DEC.DiscreteCallback(condition, affect!, save_positions = (false, false))

CreateGrid(t, W1) = DNP.NoiseGrid(t, W1)

Zygote.@nograd CreateGrid #avoid taking grads of this function

# set scalar random process

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

# get control pulses

p_all = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ; myparameters.Ωmax; myparameters.κ])

# define SDE problem

prob = SDE.SDEProblem{true}(

qubit_drift!, qubit_diffusion!, vec(u0[:, 1]), myparameters.tspan,

p_all;

callback, noise = NG)

SDEProblem with uType Vector{Float32} and tType Float32. In-place: true

Non-trivial mass matrix: false

timespan: (0.0f0, 1.0f0)

u0: 4-element Vector{Float32}:

0.68275476

-0.139929

0.0

0.7171233

Compute loss function

We'd like to prepare the excited state of the qubit. An appropriate choice for the loss function is the infidelity of the state ψ(t) with respect to the excited state. We create a parallelized EnsembleProblem, where the prob_func creates a new NoiseGrid for every trajectory and loops over the initial states. The number of parallel trajectories and the batch size can be tuned with the kwargs trajectories=.. and batch_size=.. in the solve call. See also the parallel ensemble simulation docs for a description of the available ensemble algorithms. To optimize only the parameters of the neural network, the loss takes p_nn as its argument and rebuilds the full parameter vector internally as pars = CA.ComponentArray(; p_nn, myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ]).
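As a small check of what the infidelity measures, the sketch below evaluates it on hand-made arrays with the same layout as the ensemble solution (4 × checkpoints × trajectories). The function `mean_infidelity` and the test arrays are illustrative assumptions, not the tutorial's `g`.

```julia
import Statistics

# u has shape 4 × checkpoints × trajectories, rows ordered as [ceR, cdR, ceI, cdI]
function mean_infidelity(u)
    pe = u[1, :, :] .^ 2 .+ u[3, :, :] .^ 2   # |c_e|^2, population of the excited state
    pd = u[2, :, :] .^ 2 .+ u[4, :, :] .^ 2   # |c_d|^2, population of the other state
    return Statistics.mean(pd ./ (pe .+ pd))  # averaged over checkpoints and trajectories
end

excited = zeros(4, 3, 2); excited[1, :, :] .= 1.0   # every entry is the target state
ground = zeros(4, 3, 2); ground[2, :, :] .= 1.0     # every entry is orthogonal to the target
```

The infidelity is 0 when all saved states equal the excited state and 1 when they are all orthogonal to it, so minimizing it drives the population into the target state at every checkpoint.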

# compute loss

function g(u, p, t)

ceR = @view u[1, :, :]

cdR = @view u[2, :, :]

ceI = @view u[3, :, :]

cdI = @view u[4, :, :]

p[1] *

Statistics.mean((cdR .^ 2 + cdI .^ 2) ./ (ceR .^ 2 + cdR .^ 2 + ceI .^ 2 + cdI .^ 2))

end

function loss(p_nn; alg = SDE.EM(), sensealg = SMS.BacksolveAdjoint(autojacvec = SMS.ReverseDiffVJP()))

pars = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ])

u0 = prepare_initial(myparameters.dt, myparameters.numtraj)

function prob_func(prob, i, repeat)

# prepare initial state and applied control pulse

u0tmp = deepcopy(vec(u0[:, i]))

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

SDE.remake(prob; p = pars, u0 = u0tmp, callback, noise = NG)

end

ensembleprob = SDE.EnsembleProblem(prob; prob_func, safetycopy = true)

_sol = SDE.solve(ensembleprob, alg, SDE.EnsembleThreads();

sensealg,

saveat = myparameters.tinterval,

myparameters.dt,

adaptive = false,

trajectories = myparameters.numtraj, batch_size = myparameters.numtraj)

A = convert(Array, _sol)

l = g(A, [myparameters.C1], nothing)

# returns loss value

return l

end

loss (generic function with 1 method)

Visualization

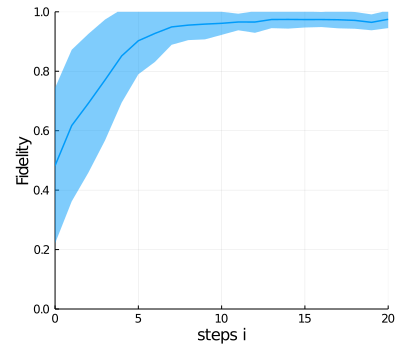

To visualize the performance of the controller, we plot the mean value and standard deviation of the fidelity over an ensemble of trajectories (myparameters.numtrajplot) as a function of the time steps at which loss values are computed.
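The statistics behind the plot reduce to a mean and a standard deviation taken across trajectories at each checkpoint. A minimal sketch with made-up fidelity values (three checkpoints, two trajectories) looks like this:

```julia
import Statistics

# hypothetical fidelity values: rows are checkpoints, columns are trajectories
fidelity = [0.2 0.4; 0.5 0.7; 0.9 1.0]

mf = vec(Statistics.mean(fidelity, dims = 2))  # mean over trajectories at each checkpoint
sf = vec(Statistics.std(fidelity, dims = 2))   # sample standard deviation over trajectories
```

`mf` is what gets drawn as the line and `sf` as the ribbon around it, one value per checkpoint.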

function visualize(p_nn; alg = SDE.EM())

u0 = prepare_initial(myparameters.dt, myparameters.numtrajplot)

pars = CA.ComponentArray(; p_nn,

myparameters = [myparameters.Δ, myparameters.Ωmax, myparameters.κ])

function prob_func(prob, i, repeat)

# prepare initial state and applied control pulse

u0tmp = deepcopy(vec(u0[:, i]))

W = sqrt(myparameters.dt) * randn(typeof(myparameters.dt), size(myparameters.ts)) #for 1 trajectory

W1 = cumsum([zero(myparameters.dt); W[1:(end - 1)]], dims = 1)

NG = DNP.NoiseGrid(myparameters.ts, W1)

SDE.remake(prob; p = pars, u0 = u0tmp, callback, noise = NG)

end

ensembleprob = SDE.EnsembleProblem(prob; prob_func, safetycopy = true)

u = SDE.solve(ensembleprob, alg, SDE.EnsembleThreads();

saveat = myparameters.tinterval,

myparameters.dt,

adaptive = false, #abstol=1e-6, reltol=1e-6,

trajectories = myparameters.numtrajplot,

batch_size = myparameters.numtrajplot)

ceR = @view u[1, :, :]

cdR = @view u[2, :, :]

ceI = @view u[3, :, :]

cdI = @view u[4, :, :]

infidelity = @. (cdR^2 + cdI^2) / (ceR^2 + cdR^2 + ceI^2 + cdI^2)

meaninfidelity = Statistics.mean(infidelity)

loss = myparameters.C1 * meaninfidelity

@info "Loss: " loss

fidelity = @. (ceR^2 + ceI^2) / (ceR^2 + cdR^2 + ceI^2 + cdI^2)

mf = Statistics.mean(fidelity, dims = 2)[:]

sf = Statistics.std(fidelity, dims = 2)[:]

pl1 = Plots.plot(0:(myparameters.Nintervals), mf,

ribbon = sf,

ylim = (0, 1), xlim = (0, myparameters.Nintervals),

c = 1, lw = 1.5, xlabel = "steps i", ylabel = "Fidelity", legend = false)

pl = Plots.plot(pl1, legend = false, size = (400, 360))

return pl, loss

end

# callback to visualize training

visualization_callback = function (state, l; doplot = false)

println(l)

if doplot

pl, _ = visualize(state.u)

display(pl)

end

return false

end

#7 (generic function with 1 method)

Training

We use the Adam optimizer to optimize the parameters of the neural network. In each epoch, we draw new initial quantum states, compute the forward evolution, and, subsequently, the gradients of the loss function with respect to the parameters of the neural network. sensealg allows one to switch between the different sensitivity modes. InterpolatingAdjoint and BacksolveAdjoint are the two possible continuous adjoint sensitivity methods. The necessary correction between Ito and Stratonovich integrals is computed under the hood in the SciMLSensitivity package.
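For orientation, the textbook relation between the two interpretations is given here for a generic SDE with state X_t, drift f, and diffusion g driven by a scalar Wiener process. The symbols f and g are generic placeholders rather than the tutorial's notation, and this says nothing about how SciMLSensitivity implements the conversion internally:

\[ dX_t = f(X_t)\,dt + g(X_t)\,dW_t \quad\Longleftrightarrow\quad dX_t = \Big[ f(X_t) - \tfrac{1}{2}\,\frac{\partial g}{\partial X}(X_t)\,g(X_t) \Big]\,dt + g(X_t)\circ dW_t . \]

The left-hand side is understood in the Ito sense and the right-hand side in the Stratonovich sense. The correction vanishes when g does not depend on the state, which is not the case for the multiplicative noise used here.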

# optimize the parameters for a few epochs with Adam on time span

# Setup and run the optimization

adtype = OPT.AutoForwardDiff()

optf = OPT.OptimizationFunction((x, p) -> loss(x), adtype)

optprob = OPT.OptimizationProblem(optf, p_nn)

res = OPT.solve(optprob, OPO.Adam(myparameters.lr),

callback = visualization_callback,

maxiters = 100)

# plot optimized control

visualization_callback(res, loss(res.u); doplot = true)

false

References

[1] Schäfer, Frank, Pavel Sekatski, Martin Koppenhöfer, Christoph Bruder, and Michal Kloc. "Control of stochastic quantum dynamics by differentiable programming." Machine Learning: Science and Technology 2, no. 3 (2021): 035004.